When looking at a website’s architectural issues, nothing delivers a bigger benefit than making sure your internal navigation and links are set up for proper search engine spidering. The same structure also gives visitors the ability to find the information they need quickly and effectively. Conversely, there is no bigger breakdown in site architecture than navigation that fails to help visitors move through the site, or that effectively blocks search engines from indexing your content.

When all is said and done, it doesn’t matter how perfectly your site is optimized if your navigation fails to get searchers and search engines to your content. Without that, your optimization efforts are doomed to failure.

Most people think of their website’s navigation as being little more than the main navigation that typically displays at the top or right side of each web page. That’s a significant part of it, but, in truth, there is a lot to know about how every link on your site should work in order to maintain a structurally sound, search engine friendly, and user-optimized website. But the main navigation is a good place to start…

Build an Efficient Navigational Structure

When it comes to navigation, what is “efficient” for one site may not be efficient for another. Each site is unique and must have unique navigational characteristics. Here are some points to consider:

- Should your navigation be on the top or the side?

- Or a combination of both?

- Should you include main categories only or sub-categories as well?

- Should you use drop-down or flyout menus?

- Do you have enough room to get your key pages in the navigation?

- If not, what has to be moved “off” the main navigation?

- What other information needs to be presented in the navigational area?

These are just a few key questions that will need to be answered before determining the best navigational experience to create for your site visitors.

However you choose to lay out your site navigation, categories, and sub-categories, there are some “essential” navigational items to consider:

Logo image

This may be stating the obvious, but you should always include your logo at the top of every page of your site. This is your primary site identifier and what gives the visitor continuity as they navigate from page to page.

Home link

Not only should your logo image link back to your home page, but you also need a separate “home” link that does the same job. Not everyone knows that logo images link back to the home page, so including this obvious link ensures anyone can find their way there.

About Us link

Be sure you don’t make it too difficult for people to learn more about you. Including a link to your About Us page in your main navigation ensures you don’t give the impression you’re trying to hide who you really are. It’s a credibility and trust issue.

Contact link

Provide a clear and obvious link to your Contact Us page so visitors don’t have to dig to find it. Hidden contact information is a common frustration for visitors. While hiding it might cut down on “unnecessary” calls, it also cuts down on sales from visitors who won’t do business with someone they can’t easily reach.

Phone number

Many businesses don’t want to post their phone number, instead trying to redirect visitors to web forms. This can reduce the need for manpower, but some customers need to feel secure that if they have a problem, a real person can be reached. Forms and email leave too much room for non-responsiveness for some people’s liking.

Search bar

Giving visitors a way to search your site lets them bypass the “search by navigation” option and get directly to the information they are looking for. Every click you eliminate removes another point where a visitor might give up, which can lift your conversion rate.

Checkout/basket link

If you run an e-commerce site, having shopping cart/checkout/basket links helps keep visitors engaged in the shopping process, more easily converting them from shoppers to customers.

Navigation Design Matters

The included elements of your navigation are only one aspect of building an effective primary navigational structure. How those elements function together makes a big difference as well. Recently there has been a move by web designers to cram more and more links into their main navigation, reducing the number of clicks it takes to get to the content. Reducing clicks is almost always a good thing, but sometimes catering to that visitor need can get in the way of overall site performance.

There are two types of navigational menus I’m not a big fan of, because when they’re implemented incorrectly, they can cause problems for both searchers and search engines.

Sitemap Menus

A sitemap menu is essentially a top-tier navigation menu that has far too many links (i.e., a link to almost every page of the website). This is usually done with drop-down or flyout menus. The problem with sitemap menus is that when every page links to every other page, there is virtually no page hierarchy or segmentation: sub-categories end up on the same level as their parent categories. Visitors see the hierarchy visually, but the search engines don’t. This can pose problems when you want to use that hierarchy for good site architecture.

Home Depot uses flyouts to provide a link to virtually every category and sub-category on their site.

Flyout Menus

The problem with flyout menus is that they can create usability issues as people try to navigate through the site. Usually, the visitor places their mouse over a category, then has to move it to the right to reach the sub-categories that have just appeared. If the visitor accidentally moves the mouse too high or too low along the way, the flyout disappears. So frustrating! In the image example above, Home Depot fixed this usability issue by adding a delay before the flyout menu closes when the visitor moves their mouse off the category. This delay allows for accidental mouse movements without the menu closing on them too quickly.
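The close-delay idea can be modeled without any browser code. The sketch below is a hypothetical, simplified model, not Home Depot’s actual implementation. It treats pointer positions as timestamped “over the menu / off the menu” samples and closes the flyout only after the pointer has stayed off the menu longer than a chosen delay. The 300 ms default is an illustrative assumption.

```python
from typing import List, Optional, Tuple

# One pointer sample: (timestamp in milliseconds, is the pointer over the menu?)
Sample = Tuple[int, bool]


def flyout_close_time(samples: List[Sample], delay_ms: int = 300) -> Optional[int]:
    """Return when the flyout closes, or None if the pointer ends over the menu.

    Hypothetical model: the menu closes only after the pointer has been
    outside it continuously for delay_ms. Coming back before the timer
    runs out cancels the pending close.
    """
    left_at: Optional[int] = None  # when the pointer last left the menu
    for t, inside in samples:
        if left_at is not None and t - left_at >= delay_ms:
            return left_at + delay_ms  # timer expired before this sample
        if inside:
            left_at = None  # pointer came back in time: cancel the close
        elif left_at is None:
            left_at = t  # pointer just left: start the close timer
    return None if left_at is None else left_at + delay_ms


# A brief slip off the menu (100 ms to 250 ms) while heading for a sub-category.
slip = [(0, True), (100, False), (250, True), (400, True)]
print(flyout_close_time(slip, delay_ms=0))    # no delay: the menu closes at 100
print(flyout_close_time(slip, delay_ms=300))  # with a delay: it stays open (None)
```

With no delay, the accidental slip closes the menu immediately. With the delay, the same slip is forgiven because the pointer returns before the timer expires.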

Minimize Footer Navigation Links

In the early days of web design, footer links were used to duplicate the main navigation in case the visitor had images turned off. Today, very few navigation menus use images so there is no need for this duplication. Unfortunately, for many site developers, the practice still holds.

More recently, the footer has been used as an alternative to putting a sitemap menu in the main navigation: the same sitemap-style list of links is simply moved into the footer. Unfortunately, the problems are the same regardless of where you put it.

The Home Depot doesn’t have EVERY link in their footer, but it sure is a big list that could easily be truncated.

The best use for your footer navigation is to hold a few key pages and links that should be accessible without interfering with the visitor’s primary shopping experience. It’s really a good place for some housekeeping links. But again, there’s no need to display them all; just get visitors to the main pages and let them find the links from there.

Institute Site-Wide Breadcrumbs

Breadcrumbs are a great navigational tool that serves both visitors and search engines. For visitors, they provide visual indicators of where in the site they are, plus a convenient way to move back to higher-level categories and sub-categories without having to return to the main navigation to hunt and search.

When you use appropriate keywords in your breadcrumbs, you also provide strong keyword clues to the visitor about the content of your pages. This lets you use headings for more than displaying a category title, since the breadcrumb covers that for you. You then have more freedom to make your headings more compelling than you otherwise could if they also had to serve as a top-level category indicator.

Using those keyword indicators in your breadcrumbs is also an assist to your search engine optimization efforts. These breadcrumbs are keyword-rich on-page links to the page on your site that is the best representation for those keywords. Outside of your main navigation, breadcrumbs give you some of your best internal linking opportunities.

In addition, breadcrumbs help build a stronger search hierarchy of your category, sub-category, and product pages. This internal crosslinking helps the search engines understand the relationships between these pages and determine the importance, level, and value of each, in accordance with its place in the structure. While breadcrumbs generally do nothing more than mimic the “trail” that brought the visitor to the page, they provide additional levels of linking that the main navigation often cannot support.
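A breadcrumb trail usually mirrors the URL hierarchy, which makes it easy to generate. The sketch below assumes your URLs follow a /category/sub-category/page pattern. It also assumes you keep a mapping from each category path to the keyword-rich label you want as its link text. The `LABELS` mapping and the tool paths are hypothetical.

```python
from html import escape
from typing import Dict, List, Tuple

# Hypothetical mapping from category path to keyword-rich breadcrumb label.
LABELS: Dict[str, str] = {
    "/": "Home",
    "/tools/": "Tools",
    "/tools/power-tools/": "Power Tools",
}


def breadcrumb_trail(path: str, labels: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (url, label) pairs for every labeled ancestor of `path`, home first."""
    parts = [p for p in path.strip("/").split("/") if p]
    trail = [("/", labels["/"])]
    prefix = "/"
    for part in parts[:-1]:  # ancestors only; the current page is not linked
        prefix += part + "/"
        if prefix in labels:
            trail.append((prefix, labels[prefix]))
    return trail


def breadcrumb_html(path: str, labels: Dict[str, str], current_title: str) -> str:
    """Render the trail as links, ending with the current page as plain text."""
    links = [f'<a href="{escape(url)}">{escape(label)}</a>'
             for url, label in breadcrumb_trail(path, labels)]
    return " &gt; ".join(links + [escape(current_title)])


print(breadcrumb_html("/tools/power-tools/cordless-drills.htm", LABELS, "Cordless Drills"))
```

Each ancestor link carries its category keywords as anchor text. That is where the internal-linking value described above comes from.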

Use Keyword-Rich Link Text

One of the important and beneficial factors of breadcrumbs, as I mentioned above, is that you can use keywords linking to the page that best represents those keywords. This keyword-in-link strategy needs to be used beyond your navigation and breadcrumb structure. It needs to be used throughout your site whenever you are linking to other content or pages of your site.

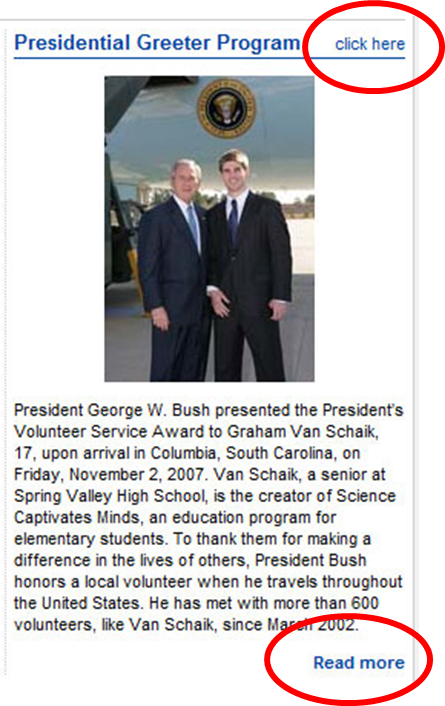

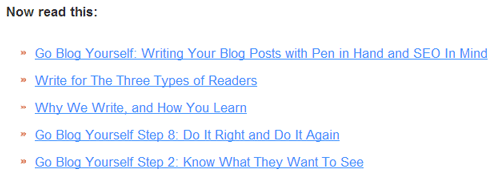

The image below shows an example of how many people link to other websites. The link text reads “click here” or “read more”. Or in this case, both! The problem with this type of linking is the words in the link don’t provide any indication as to what information the visitor will be getting if they do click. They have to read the content around the link to know for sure. The search engines are given no indication whatsoever as to what the linked page will produce either.

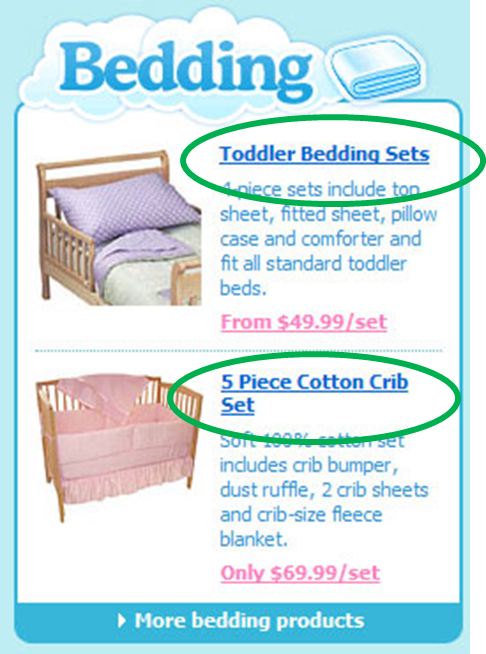

However, if you look at this next image, you’ll see that the links themselves give both the search engines and shoppers a clear indication of the information they’ll be taken to once they click. The link text also gives Google’s algorithms an additional signal of the linked page’s relevance.

You can employ the same strategy when adding links to paragraph text, not just headings as used in the images above. For example, instead of:

[Click here](http://www.site.com/category/sub-category/page.htm) to learn more about preparing personal tax returns.

You can simply say:

Learn more about [preparing personal tax returns](http://www.site.com/category/sub-category/page.htm).

Many people don’t like that because the link doesn’t have the “click here” call to action. That leaves you with two alternatives:

[Click here](http://www.site.com/category/sub-category/page.htm) to learn more about [preparing personal tax returns](http://www.site.com/category/sub-category/page.htm).

or

[Click here to learn more about preparing personal tax returns](http://www.site.com/category/sub-category/page.htm).

Neither of these is a great option, though I would opt for the second over the first. Using two links to the same page may not give you the keyword value you’re looking for, because search engines often treat the link text of the first link to a page as the best text to use and ignore the rest. The second option is still quite cumbersome, but workable.

As a general rule, if your content mentions something that is covered in more detail on another page, link to that page using keywords. With that said, don’t go overboard and drown your text in a sea of blue underlined links (or a sea of links in any color, for that matter). Keep a decent balance between content for the page and links pointing to other pages.
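To find “click here” style links across existing pages, a small audit can help. This is a minimal sketch using Python’s standard `html.parser`. It collects each link’s visible text and flags links that are empty or use a generic phrase. The phrase list and the sample URL are assumptions to adapt to your own site.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

# Generic anchor phrases that say nothing about the destination (assumed list).
GENERIC_ANCHORS = {"click here", "read more", "here", "more", "learn more"}


class AnchorCollector(HTMLParser):
    """Collect (href, visible text) for every <a> element in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.anchors: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only collect text inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.anchors.append((self._href, text))
            self._href = None


def generic_anchors(html: str) -> List[Tuple[str, str]]:
    """Return links whose text is a generic phrase or has no visible text."""
    parser = AnchorCollector()
    parser.feed(html)
    parser.close()
    return [(h, t) for h, t in parser.anchors if not t or t.lower() in GENERIC_ANCHORS]


sample = ('<p><a href="/tax-returns.htm">Click here</a> to learn more about '
          '<a href="/tax-returns.htm">preparing personal tax returns</a>.</p>')
print(generic_anchors(sample))
```

Only the “Click here” link is flagged. The keyword-rich link to the same page passes.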

Link to Related Topics or Products

One of your greatest opportunities to link to content using keywords is to link to related topics or products. Each link to a page on your site adds relevance to that page, relative to the number of links pointing to your other pages. But regardless of how much weight any particular link holds, the keywords in that link provide added value and significance.

When linking to related, similar, or “customers also bought” products, you’re doing more than adding link equity to your site. You’re giving your visitors more of what they might want. In the example below, the website assumed that my interest in Battlestar Galactica might also mean I’m interested in Firefly and Stargate TV shows. Guess what? They are right! Both of those are shows I enjoy and if I didn’t already have Firefly on Blu-Ray, I might have bought it at the same time.

You can do something similar with your blog posts by installing a plugin that automatically pulls in other blog posts on a similar topic.

Each of these blog posts might be of interest to the reader of the post these links are displayed under and, if the blog post titles are keyword optimized, more keyword weight is passed on for the algorithms to factor in.
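Related-post plugins differ in how they decide what counts as “similar.” One simple approach is to rank other posts by how many tags they share with the current post, using Jaccard similarity. It is sketched here as an assumption, not as how any particular plugin works. The post titles and tags are hypothetical.

```python
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Shared tags divided by all distinct tags across both posts (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def related_posts(current: str, tags: Dict[str, Set[str]], limit: int = 3) -> List[str]:
    """Return up to `limit` other posts, most similar first (ties alphabetical)."""
    scored = [(jaccard(tags[current], post_tags), title)
              for title, post_tags in tags.items() if title != current]
    scored = [s for s in scored if s[0] > 0]  # skip posts with no shared tags
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [title for _, title in scored[:limit]]


posts = {
    "Internal Linking Basics": {"seo", "links", "navigation"},
    "Writing Keyword-Rich Anchor Text": {"seo", "links", "copywriting"},
    "Breadcrumb Navigation Tips": {"navigation", "usability"},
    "Company Picnic Photos": {"news"},
}
print(related_posts("Internal Linking Basics", posts))
```

If your post titles are keyword optimized, every title this returns becomes keyword-rich link text on the page.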

What Do You Do With Hundreds Upon Hundreds of Products?

E-commerce sites have an especially large challenge when it comes to managing their internal link structure, while giving their visitors options for filtering products without creating duplicate content or having the search engines index an endless loop of pages. I’m not sure I’ll be able to produce the “perfect” solution, but I can provide some tips and pointers to help you develop your internal linking of products and categories to eliminate many of the potential problems.

Filters are a great way to drill down to the product that best meets your needs. The problem with filters, from a search engine perspective, is that they can create an endless loop of options, leading to URL after URL of product lists that differ very little from each other. But if you prevent these filters from being spidered, you potentially lose good landing pages and, at the same time, feed the search engines category pages with long lists of product links. Somewhere there has to be a balance between duplicate filter pages and fewer products per page.

The place I would start is figuring out which filters are worthy of their own optimized pages. For example, filtering by brand would give you a great page to optimize for brand keywords. However, filtering by color probably doesn’t warrant its own optimizable landing page, since very few people search for everything that comes in blue. They find things they want, then see if they come in their favorite color. This might depend on your industry, though. For some, color might actually make a good landing page.

Once you have an idea of which filters warrant their own landing pages, build those filters to generate unique URLs with unique content. The rest of the filters can simply be kept out of the search engine indexes, either by using on-the-fly filtering that keeps the visitor on the same URL or by sending visitors to URLs that the search engines are prohibited from indexing. (I like the former option better.)
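One way to enforce this split is a small policy that decides whether a filtered URL should be indexable. It is sketched here under assumptions: the `brand` and `color` query parameters are hypothetical, and brand is assumed to be the only filter with search demand. A URL stays indexable only if every filter it uses is on the allow-list, and each filter appears at most once. Anything else would be handled on the fly or marked noindex.

```python
from typing import FrozenSet
from urllib.parse import parse_qsl, urlsplit

# Filters judged worthy of their own landing pages (hypothetical choice).
INDEXABLE_FILTERS: FrozenSet[str] = frozenset({"brand"})


def is_indexable_filter_url(url: str, allowed: FrozenSet[str] = INDEXABLE_FILTERS) -> bool:
    """True if every query parameter is an allowed filter, each used once."""
    params = parse_qsl(urlsplit(url).query, keep_blank_values=True)
    names = [name for name, _ in params]
    if len(names) != len(set(names)):
        return False  # repeated filters (brand=a&brand=b) multiply near-duplicate URLs
    return all(name in allowed for name in names)


for url in ["http://www.site.com/drills/",
            "http://www.site.com/drills/?brand=dewalt",
            "http://www.site.com/drills/?color=blue",
            "http://www.site.com/drills/?brand=dewalt&color=blue"]:
    print(url, is_indexable_filter_url(url))
```

A plain category URL has no filters, so it passes. Brand pages pass. Any URL that adds a color filter does not.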

Even so, some of your filter landing pages might have far too many products. If that’s the case, you might add even more sub-level filter landing pages to reduce the number of products per page. Otherwise, I suggest creating a page that displays all products, rather than forcing the search engines to spider page 2, page 3, page 4, and so on.

Again, I can’t say these are perfect solutions, but they may get you moving in the right direction.

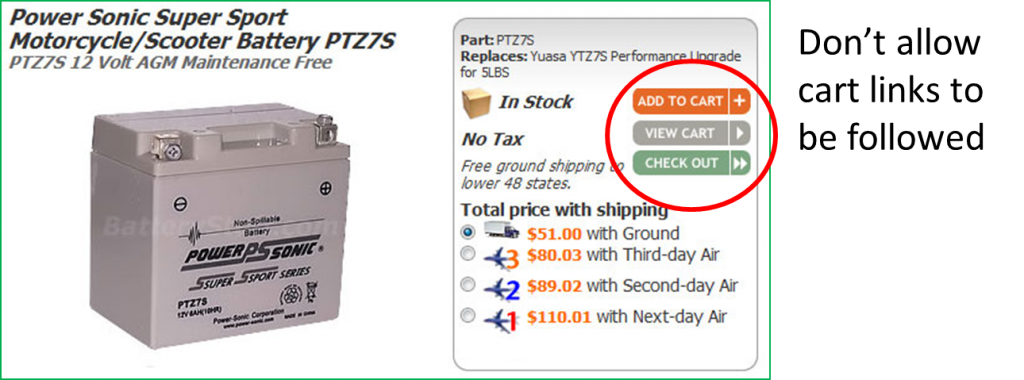

Don’t Link to Shopping Cart Pages

Search engines aren’t shopping, so they don’t need to place items in your shopping cart or attempt to check out. All such links should be written so that the search engines cannot “click” on them, or follow them. Usually this can be done by using JavaScript rather than HTML links; however, your developers may come up with a unique solution for your own needs.

However you implement it, the end result needs to be unspiderable add-to-cart links. But don’t stop there; do the same for links to write reviews, printer-friendly pages, add-to-wishlist, add comments, and other links that typically create pages the search engines don’t need to get into. While you can simply keep the search engines from indexing those pages, the best solution is to ensure they can’t read the links to begin with.
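Before and after your developers change these links, it helps to audit pages for action links that are still plain, crawlable `<a href>` links. The sketch below assumes hypothetical URL patterns for action pages (`/cart`, `/checkout`, `/wishlist`, `/print`, `/review`, `/comment`); substitute your own. Controls rendered as JavaScript-driven buttons are not reported, because the parser only looks at `href` attributes on `<a>` tags.

```python
import re
from html.parser import HTMLParser
from typing import List

# Hypothetical action-page patterns (prefix match; tune to your own URLs).
ACTION_PATTERN = re.compile(r"/(cart|checkout|wishlist|print|review|comment)", re.IGNORECASE)


class CrawlableActionLinks(HTMLParser):
    """Collect <a href> values that point at action pages."""

    def __init__(self) -> None:
        super().__init__()
        self.found: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        if ACTION_PATTERN.search(href):
            self.found.append(href)


def crawlable_action_links(html: str) -> List[str]:
    parser = CrawlableActionLinks()
    parser.feed(html)
    parser.close()
    return parser.found


page = ('<a href="/drills/dw123.htm">DeWalt drill</a>'
        '<a href="/cart/add?sku=dw123">Add to cart</a>'
        '<button data-sku="dw123">Add to wishlist</button>')
print(crawlable_action_links(page))
```

Here the add-to-cart link is still a crawlable HTML link and gets reported. The wishlist button is not reported.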

Strong Internal Search

Internal search isn’t a link issue, but it is a navigational issue, and can lead to some linking problems. Let’s start with the navigation side of things.

To start, every site approaching a couple dozen pages could use an internal search. Your main navigation should do a great job of getting people to the information they want with as few clicks as possible. However sometimes visitors just want to search in order to get what they want more quickly and with less “figuring it out.” A good internal search will do that, but only if it works!

Be very careful about having an internal search that fails to get visitors to their destination at least 90% of the time. If a visitor searches your website and doesn’t find the results they want, they will leave, assuming you don’t have what they’re looking for. If you really do, you just lost a sale. It’s better to have no search at all than one that doesn’t deliver visitors to what they want at least 90% of the time.
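To know whether your search clears that 90% bar, you have to measure it. The sketch below assumes a hypothetical search log in which each search records whether the visitor went on to click a result. Treating “clicked a result” as “found what they wanted” is itself an approximation.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class SearchEvent:
    """One internal search from a hypothetical search log."""
    query: str
    clicked_result: bool  # proxy for "found what they wanted"


def success_rate(events: Iterable[SearchEvent]) -> float:
    """Share of searches that ended with a click on a result."""
    events = list(events)
    if not events:
        return 0.0
    return sum(e.clicked_result for e in events) / len(events)


def zero_click_queries(events: Iterable[SearchEvent]) -> List[str]:
    """Distinct queries that never led to a click: candidates to fix first."""
    return sorted({e.query for e in events if not e.clicked_result})


log = [SearchEvent("cordless drill", True)] * 9 + [SearchEvent("dril bits", False)]
rate = success_rate(log)
print(f"{rate:.0%} of searches succeeded; target is 90%")
print(zero_click_queries(log))
```

The zero-click list is often more useful than the rate itself. It points to misspellings and missing synonyms your search should handle.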

Internal search causes linking problems when sites allow their search results pages to be indexed by the search engines. Search engines have been known to “search” sites for random words and characters. This produces a potentially unlimited number of URLs for the search engine to index. Most of them provide no value to the site and simply slow down or dilute the evaluation of the rest of the site.

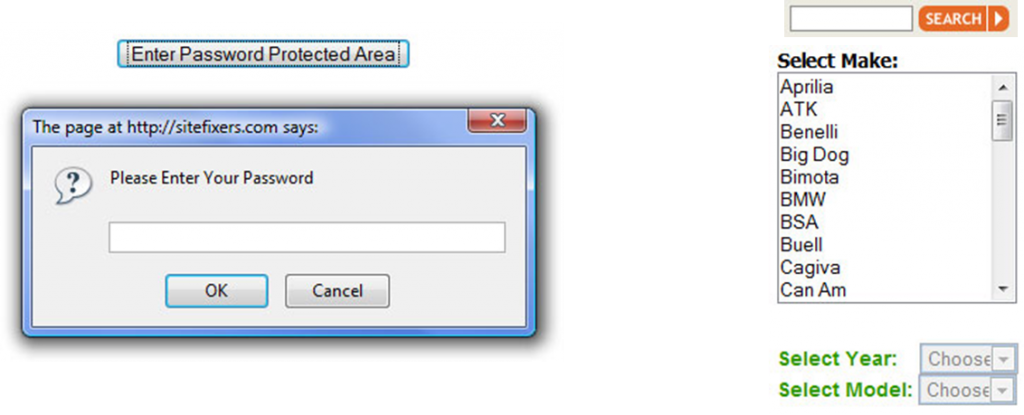

Avoid Blocking Content Behind Forms

Sometimes forms are used to help visitors find content faster; however, such forms can also keep search engines away from content that you want in the index so it can rank and drive traffic. Any content a visitor can reach only by entering a password or making a form selection will not help your search engine optimization efforts. If it is your intent to keep that information hidden, then you’re fine. However, if you want to use that content to drive traffic, you’ll need to rethink the way people access it.

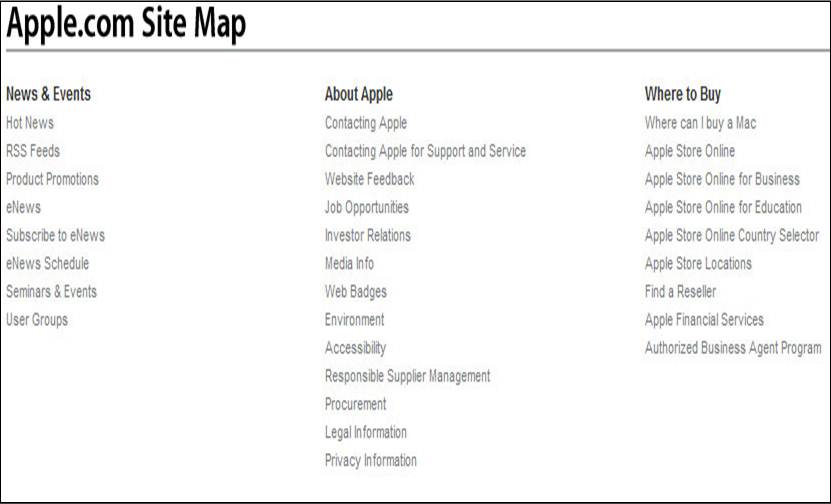

Add Visitor Sitemaps

XML sitemaps are a good way to get your content spidered by the search engines, but don’t use them as a substitute for an HTML sitemap for your visitors. A good sitemap can help visitors quickly navigate to any page on your site in just a couple of clicks. It bypasses the main navigation and instead lets visitors see the entire site from a bird’s-eye view.

It also provides a way to ensure the search engines don’t have to spider every page to get to the pages several clicks away from the home page. It can help get these pages indexed and weighted sooner, and keep them fresher in the index.
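If your site structure is already available as data, the HTML sitemap can be generated rather than maintained by hand. This is a minimal sketch that assumes a hypothetical two-level outline (sections and their child pages) and renders it as nested lists of keyword-rich links.

```python
from html import escape
from typing import Dict, List, Tuple

Page = Tuple[str, str]  # (url, link text)


def html_sitemap(sections: Dict[Page, List[Page]]) -> str:
    """Render a two-level HTML sitemap as nested unordered lists."""
    lines = ["<ul>"]
    for (url, text), children in sections.items():
        lines.append(f'  <li><a href="{escape(url)}">{escape(text)}</a>')
        if children:
            lines.append("    <ul>")
            for child_url, child_text in children:
                lines.append(f'      <li><a href="{escape(child_url)}">{escape(child_text)}</a></li>')
            lines.append("    </ul>")
        lines.append("  </li>")
    lines.append("</ul>")
    return "\n".join(lines)


# Hypothetical site outline.
outline = {
    ("/tools/", "Tools"): [("/tools/power-tools/", "Power Tools"),
                           ("/tools/hand-tools/", "Hand Tools")],
    ("/about-us.htm", "About Us"): [],
}
print(html_sitemap(outline))
```

Every page in the outline ends up one click from the sitemap. That shortcut is what helps pages deep in the hierarchy get spidered sooner.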

Absolute vs. Relative Links

What’s the difference between an absolute and relative link? An absolute link hard codes the entire URL into the HTML:

```html
<a href="http://www.site.com/category/sub-category/page.htm">Link Text Goes Here</a>
```

A relative link allows you to put in only what’s needed in the code for the browser to know how to get to the destination based on where the visitor currently is:

```html
<a href="../sub-category/page.htm">Link Text Goes Here</a>
```

There is a lot less code needed for a relative link and many HTML editors, by default, use relative links rather than creating absolute links. Web developers benefit by using relative links when building websites on a testing URL. When the site rolls out they don’t have to worry about changing any of the links because the relative links use the site structure, rather than the domain name, to work. When the developers change the test domain to the main domain, all is well.
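To see where a relative link really points, you can resolve it the way a browser does. Python’s standard `urllib.parse.urljoin` follows the same reference-resolution rules (RFC 3986). The page URLs below reuse the article’s site.com placeholder and are hypothetical.

```python
from urllib.parse import urljoin

RELATIVE_HREF = "../sub-category/page.htm"

# The same relative link, placed on pages at different depths of the site.
pages = [
    "http://www.site.com/category/sub-category/other.htm",
    "http://www.site.com/category/another-category/list.htm",
    "http://www.site.com/index.htm",
]

resolved = {page: urljoin(page, RELATIVE_HREF) for page in pages}
for page, target in resolved.items():
    print(page, "->", target)
```

From the two category-level pages, the link resolves to http://www.site.com/category/sub-category/page.htm as intended. On the top-level page there is no parent folder, so the “../” is dropped and the link resolves to http://www.site.com/sub-category/page.htm, a URL that may not exist. This is one way relative links can quietly go wrong when a template is reused at a different depth.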

However relative links leave room for problems and, in some cases, outright disaster. Without going into all the potential problems relative links can cause, I will say that absolute links are pretty absolute and leave very little room for error when the link is properly formed. The downside is when transferring a site from a test domain to the real domain, you have to ensure those links are all changed accordingly.
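If you go the absolute-link route, the test-to-live switch can be scripted. This is a minimal sketch that assumes hypothetical hostnames: `test.site.com` for staging and the article’s `www.site.com` for the live site. It rewrites only double-quoted `href` values whose host is exactly the test host and leaves other links untouched. A regex pass like this is a rough tool, so review its output rather than trusting it blindly.

```python
import re
from urllib.parse import urlsplit, urlunsplit

TEST_HOST = "test.site.com"  # hypothetical staging host
LIVE_HOST = "www.site.com"   # live host used in the article's examples

HREF = re.compile(r'href="([^"]*)"')  # double-quoted href attributes only


def _swap_host(match: "re.Match[str]") -> str:
    url = match.group(1)
    parts = urlsplit(url)
    if parts.hostname == TEST_HOST:  # relative links have no host and are skipped
        url = urlunsplit(parts._replace(netloc=LIVE_HOST))
    return f'href="{url}"'


def go_live(html: str) -> str:
    """Point every absolute link on the test host at the live host."""
    return HREF.sub(_swap_host, html)


page = ('<a href="http://test.site.com/category/sub-category/page.htm">Page</a> '
        '<a href="http://www.example.org/">Partner</a>')
print(go_live(page))
```

Note that replacing the whole host also drops any port number on the test URLs. That is usually what you want when moving from a staging server to the live domain.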

Using Search Engine Friendly Links

How your links are coded can make the difference between getting the page spidered by the search engines and preventing the search engines from finding the page.

Good, search-engine-friendly HTML links typically look like this:

```html
<a href="http://www.site.com/category/sub-category/page.htm">Link Text Goes Here</a>
```

If this is what your links look like then you know your links are built right. But for a variety of reasons, some links are coded differently. It’s these links that can be unspiderable to the search engines. Here are a couple examples:

```javascript
javascript:plnav('h09264','pl','image')
```

or

```javascript
JavaScript:window.open('http://www.site.com/category/sub-category/page.htm', 'newWindow')
```

The first example above is completely useless to the search engine. The page this links to cannot be found, and unless there are other search engine friendly links pointing to the page somewhere out there in the world, that page will forever remain outside the search engine index.

The second example is a bit iffy. While the search engine may not readily recognize the JavaScript as a link, it can follow the URL in the code to index the page. However, there is no guarantee that the link will count as a link, so you may lose the link value that helps pages gain authority.

Unless you’re trying to keep pages out of the index, the best recommendation is to use search engine friendly HTML links rather than any type of JavaScript link that may cause spidering issues. Even if the pages can be spidered, you want to make sure the links count as links!
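To find which of your links fall into each bucket, a rough classifier can sort `href` values into the three cases described above. This is a sketch under assumptions. The labels summarize this article’s description, not how any particular search engine behaves. Only quoted absolute URLs inside the JavaScript are detected.

```python
import re
from typing import Optional, Tuple

# A quoted absolute URL inside JavaScript, e.g. window.open('http://...').
QUOTED_URL = re.compile(r"""['"](https?://[^'"]+)['"]""")


def classify_href(href: str) -> Tuple[str, Optional[str]]:
    """Return (kind, url) for a link target.

    kind is one of:
      "html"      - an ordinary href: spiderable, counts as a link
      "js-url"    - JavaScript containing a URL: may be followed, may not count
      "js-opaque" - JavaScript with no visible URL: effectively invisible
    """
    value = href.strip()
    if not value.lower().startswith("javascript:"):
        return "html", value
    match = QUOTED_URL.search(value)
    return ("js-url", match.group(1)) if match else ("js-opaque", None)


for href in ["http://www.site.com/category/sub-category/page.htm",
             "javascript:plnav('h09264','pl','image')",
             "JavaScript:window.open('http://www.site.com/category/sub-category/page.htm', 'newWindow')"]:
    print(classify_href(href))
```

Anything classified as `js-url` or `js-opaque` is a candidate to rewrite as a plain HTML link, unless you deliberately want that page kept out of the index.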

Blocking Pages From Being Indexed

As I mentioned above, there are times when you don’t want pages in the search engine index. There are a few options for blocking those pages. Each has pros and cons, and each is better suited to some circumstances than to others.

Robots.txt File

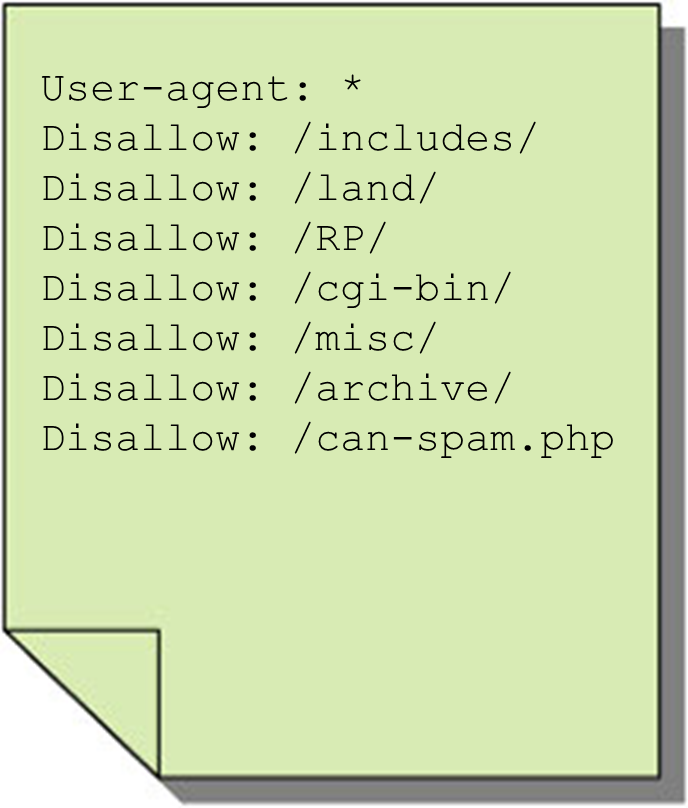

The robots.txt file is your “master control” for telling the search engines which pages they may or may not spider. When search engines visit your site, they are supposed to download this file first to get their instructions.

As you can see, it’s a rather simple file, but it’s one you don’t want to get wrong. The main key is to make sure you’re only disallowing pages or folders that you don’t want spidered, and not inadvertently disallowing something you do want. There are plenty of tutorials to help you craft a robots.txt file for your specific needs, including allowing or disallowing certain search engines. Google Webmaster Tools also offers robots.txt help that you can use.

However, I cannot stress enough the importance of getting this file right. One wrong move can wipe out all your search engine rankings almost instantly.
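One cheap safeguard is to test a robots.txt file against a list of URLs you care about before publishing it. Python’s standard `urllib.robotparser` can do this. It implements basic prefix matching, not every extension search engines support (such as wildcards in paths), so treat it as a sanity check alongside Google’s own tools. The rules and URLs below are hypothetical.

```python
from typing import Dict, List
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of internal search results and the cart.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""

# The "one wrong move": a single slash blocks the entire site.
BROKEN_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

URLS = [
    "http://www.site.com/category/sub-category/page.htm",
    "http://www.site.com/search?q=blue+drill",
    "http://www.site.com/cart/add?sku=123",
]


def check(robots_txt: str, urls: List[str], agent: str = "Googlebot") -> Dict[str, bool]:
    """Map each URL to whether `agent` may crawl it under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


print(check(ROBOTS_TXT, URLS))
print(check(BROKEN_ROBOTS_TXT, URLS))
```

With the intended rules, only the content page is crawlable. With the broken file, nothing is, including the page you want ranked.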

NoFollow Attribute

The nofollow attribute was originally designed to be added to blog comment links. It tells the engines that you don’t trust those links and that they therefore should not count them as a vote of confidence from you. Providing a vote of confidence to a known spam site can potentially hurt your link profile. However, nofollow quickly evolved into an advertising attribute. Since Google doesn’t want people paying for links to get link credit, it requires that all advertising links use the nofollow attribute. Failure to do so could hurt your backlink profile and your ability to rank in the search engines.

In simple terms, the nofollow attribute tells the search engines not to follow that link:

```html
<a rel="nofollow" href="http://www.site.com/category/sub-category/page.htm">Link Text Goes Here</a>
```

In reality, the search engines can and will follow these links; however, whatever positive or negative value the link would otherwise give you is eliminated.

As with the robots.txt file, the nofollow attribute should be used carefully and with a full understanding of the implications. Any time you add a link to an untrusted site (or an advertiser), use the nofollow attribute. However, if the link is trusted, you’re better off not using it at all.
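If your templates or CMS generate links, the rule above can be applied in one place. This is a minimal sketch that assumes a hypothetical allow-list of trusted hosts. Links to advertisers, or to any host not on the list, get `rel="nofollow"`. Relative (internal) links and trusted hosts are left alone.

```python
from html import escape
from typing import FrozenSet
from urllib.parse import urlsplit

# Hypothetical list of hosts you are willing to vouch for.
TRUSTED_HOSTS: FrozenSet[str] = frozenset({"www.site.com", "www.example.org"})


def link_tag(href: str, text: str, is_advertisement: bool = False,
             trusted: FrozenSet[str] = TRUSTED_HOSTS) -> str:
    """Render an <a> tag, adding rel="nofollow" for ads and untrusted hosts."""
    host = urlsplit(href).hostname  # None for relative (internal) links
    untrusted = host is not None and host not in trusted
    rel = ' rel="nofollow"' if (is_advertisement or untrusted) else ""
    return f'<a{rel} href="{escape(href)}">{escape(text)}</a>'


print(link_tag("http://www.example.org/guide.htm", "tax preparation guide"))
print(link_tag("http://spammy.example.net/", "cheap pills"))
print(link_tag("http://www.example.org/ad.htm", "Our sponsor", is_advertisement=True))
```

Keeping the trusted list explicit means a link is only nofollowed for a reason you can point to, rather than by default.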

Robots Meta Tag

Another option for keeping pages from being indexed is to use the robots meta tag on the page itself:

```html
<meta name="robots" content="noindex,nofollow">
```

Once the search engine spiders the page, the “noindex” portion of the tag tells them not to put it in their index. If it’s already in, it will promptly be removed. If it’s not, it won’t be added.

Using this tag has several downsides. First, the search engines have to spider the page before they can receive the instructions not to index it. If you have a lot of these pages, the search engines will spend resources spidering pages they won’t index rather than on pages you want in the index. Plus, you are potentially spending valuable link equity by linking to pages that won’t do you any good.

With that said, sometimes you need pages to be found because the links on them cannot easily be found on any other page. A good example is a tag page on your blog. Tag pages aren’t good landing pages by most counts, so you don’t want them in the search index. But you do want the search engines to follow their links to the blog posts. Using the “noindex,follow” value tells the engines not to index the page but to go ahead and follow its links.

If you want neither the page indexed nor its links followed, go with “noindex,nofollow.” On the other hand, if you want the page indexed, you could use the “index” value. However, search engines index pages by default, so adding “index” changes nothing and won’t force a page into the index.
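On a template-driven site, these choices are easiest to keep consistent when they live in one table keyed by page type. The sketch below uses hypothetical page-type names. Pages not listed get no robots meta tag at all, since indexing and following are already the default.

```python
from typing import Optional

# Hypothetical page types and the robots meta value each should carry.
ROBOTS_BY_PAGE_TYPE = {
    "blog_tag": "noindex,follow",            # keep out of the index, but follow links to posts
    "printer_friendly": "noindex,nofollow",  # duplicate of another page; nothing new to follow
}


def robots_meta(page_type: str) -> Optional[str]:
    """Return the robots meta tag for a page type, or None to rely on defaults."""
    content = ROBOTS_BY_PAGE_TYPE.get(page_type)
    if content is None:
        return None  # "index,follow" is the default, so emitting it would add nothing
    return f'<meta name="robots" content="{content}">'


print(robots_meta("blog_tag"))
print(robots_meta("product"))
```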

Keep Fixing Broken Links

Broken links happen for a variety of reasons. Sometimes you’ve removed pages, or you’ve linked to a page on another site that has since been moved or removed. For this reason, it’s good practice to regularly check for and fix any broken links you find. This can become a chore for blogs, since they often do a lot of external linking and there is a huge amount of turnover on the web. But once you’re caught up, a check every month or so should help you keep broken links under control.
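A basic monthly check can be scripted with Python’s standard library. This is a minimal sketch that sends a HEAD request to each URL and reports anything unreachable or answering with a 4xx/5xx status. The fetch function is a parameter so the logic can be tried without network access. The URLs in the usage example are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List, Optional

# Returns an HTTP status code, or None if the server could not be reached.
StatusFetcher = Callable[[str], Optional[int]]


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Request only the headers of `url` and return the final HTTP status code.

    urlopen follows redirects, so a properly 301-redirected URL reports the
    status of the page it now points to.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 404, 410, 500, ...
    except OSError:
        return None  # DNS failure, refused connection, timeout


def broken_links(urls: Iterable[str], fetch: StatusFetcher = http_status) -> List[str]:
    """Return the URLs that are unreachable or answer with a 4xx/5xx status."""
    broken = []
    for url in urls:
        status = fetch(url)
        if status is None or status >= 400:
            broken.append(url)
    return broken


# Offline usage with canned statuses instead of real requests.
fake_statuses = {"http://www.site.com/ok.htm": 200,
                 "http://www.site.com/gone.htm": 404,
                 "http://old.example.org/": None}
print(broken_links(fake_statuses, fetch=fake_statuses.get))
```

Some servers answer HEAD requests with 405 (Method Not Allowed). If that shows up in your results, re-check those URLs with a normal GET before treating them as broken.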

301 Redirect Moved or Removed Pages

Even if you remove or update the links to pages that no longer exist on your site, it’s good practice to 301 redirect those old URLs to the current relevant content. Without the 301 redirects, you lose all the link equity built into those old pages. Even if just one site links to an old page, you should implement the redirect, unless you can get the link on the external site changed.

With 301 redirects in place, you not only maintain a significant percentage of each removed page’s link equity, you also keep visitors on your site who reach those pages through browser bookmarks. You never know how many people have bookmarked that content. Without the redirect in place, there’s a good bet the visitor will move on to another site, and that visit could have resulted in a new link or even a sale that you won’t be able to capture.
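A redirect map needs upkeep of its own. When a page moves twice, the oldest URL ends up redirecting to a URL that itself redirects, creating a chain. The sketch below is a hypothetical helper, not tied to any web server. It follows a map of old paths to new paths, flattens it so every old URL points straight at its final destination, and flags loops. You would then write the resulting pairs out in whatever redirect syntax your server uses.

```python
from typing import Dict


def final_destination(path: str, redirects: Dict[str, str], max_hops: int = 10) -> str:
    """Follow the redirect map until reaching a path that is not redirected.

    Raises ValueError on a loop or an overly long chain; both should be
    fixed in the map itself.
    """
    seen = {path}
    hops = 0
    while path in redirects:
        if hops >= max_hops:
            raise ValueError(f"redirect chain longer than {max_hops} hops")
        path = redirects[path]
        hops += 1
        if path in seen:
            raise ValueError(f"redirect loop at {path}")
        seen.add(path)
    return path


def flatten(redirects: Dict[str, str]) -> Dict[str, str]:
    """Point every old URL straight at its final destination (no chains)."""
    return {old: final_destination(old, redirects) for old in redirects}


# Hypothetical history: a page moved once, then moved again.
REDIRECTS = {"/old-drills.htm": "/drills.htm", "/drills.htm": "/tools/drills/"}
print(flatten(REDIRECTS))
```

Flattening keeps every old URL one hop from its content. That spares both visitors and search engines from walking a chain of redirects.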

And who said internal linking was easy? There are certainly a lot of nuances to maintaining your internal link and navigational structure. There’s no single right way to do it, but there are a lot of ways to do it wrong that can be detrimental to your online success.

I should also note that there are other issues regarding linking and navigation that were covered in The Complete Guide to Mastering Duplicate Content Issues. But for the sake of brevity (too late) and avoiding duplication (pun intended) I did not include that information in this post. Follow the link above to read all about those!