There is little doubt that duplicate content on a site can be one of the biggest battles an SEO has to fight. Too many content management systems are designed to work well with various types of content, but they don’t always have the best architecture when it comes to how that content is implemented throughout the website.

Two Types of Duplicate Content

First, let’s start by looking at the two types of duplicate content: onsite and offsite.

Onsite duplicate content is content that is duplicated on two or more pages of your own site. A good example of this is a product that displays on two different URLs based on how it was found, or a blog post that shows up in full on both a category filter and a tag filter.

Offsite duplicate content is content that is displayed both on your website and on another. This is typical of boilerplate product descriptions that are used on just about every site selling that product, or of a blog post you syndicate on a third-party site.

Both types of duplicate content can be problematic.

Why is Duplicate Content an Issue?

While search engines have said there is no duplicate content penalty, it can still hurt your search marketing efforts. The best way to explain why duplicate content is bad is to first point out why unique content is good.

Having nothing but unique content on your site allows you to set yourself apart from everyone else. It makes you different from your competitors because the words on your pages are yours and yours alone. In a battle for uniqueness, this is your win!

On the other side of that, when your site displays content that can be found somewhere else, whether it’s a boilerplate product description or information pulled from another source, you lose all the advantage that being unique brings you. If we believe search engines want to show unique results, duplicate content creates a struggle between the duplicate versions, and only one can win.

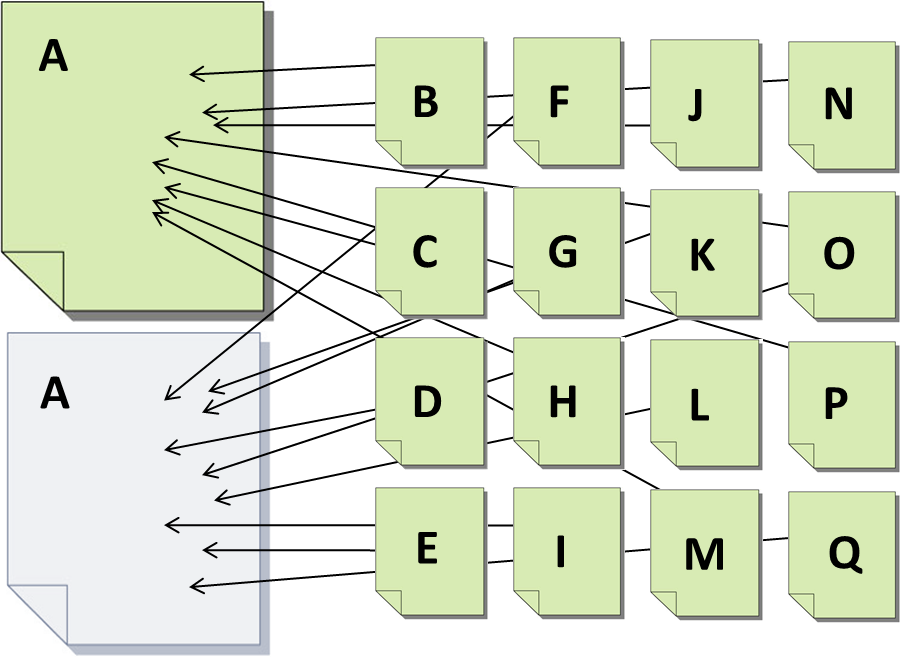

The illustration above shows two pages (A) that are duplicates. Pages B-Q link to those two pages in various forms. Which one should the search engines rank over the other? If you own both (A) pages you may not think it matters, but it does.

Here there are two likely outcomes:

- If you own both pages, the ranking (A) page is less valuable because it only has half the links. The non-ranking (A) page is stealing authority. That means the ranking page (A) won’t rank as well as it should and a competitor will take the top spot.

- If you only own one of the pages, Google has to decide which one wins. That may be you, but it may not be. It’s a gamble. But even if you do win, you still have the issues with #1 above, which means you’re always on the precipice of losing.

Each duplicate URL of content may receive links, giving neither page the full value of the link juice pointing at that valuable content. However, when content can be found on one and only one URL, you’re in no danger of splitting value between pages.

Essentially, duplication lowers the value of your content. Search engines don’t want to send people to several pages that all say the same thing, so they look for content that is unique. Unique content helps you compete by setting your pages apart from everyone else’s.

There is a secondary issue with duplicate content as well, and that is search spidering. When the search engines spider your site, they pull each page’s content and put it in their index. If they start seeing page after page of duplicate content, they may decide to use their resources somewhere else, which means fewer unique pages of your site will get indexed overall.

Dealing With Offsite Duplicate Content Problems

Offsite duplicate content has two main sources you can blame: it’s either your fault or someone else’s! At its core, it is either content you took from someone else or content someone took from you (or you gave it to them). Whether it was taken legally, with permission or without, offsite duplicate content is likely keeping your site from performing better in the search engine rankings.

Content Scrapers and Thieves

The worst content theft offenders are those that scrape content from across the web and publish it on their own sites. The result is generally a Frankensteinian collection of content pieces that produce less of a coherent finished product than the green blockhead himself. Generally, these pages are designed solely to attract visitors and get them to leave as quickly as possible by clicking on ads scattered throughout the page. There isn’t much you can do about these types of content scrapers, and search engines are actively trying to recognize them for what they are in order to purge them from their indexes.

Not everyone stealing content does it by scraping. Some just flat out take something you have written and pass it off as their own. These sites are generally higher-quality sites than the scraper sites, but some of the content is, in fact, lifted from other sources without permission. This type of duplication is more harmful than scrapers because the sites are, for the most part, seen as quality sites and the content is likely garnering links. Should the stolen content produce more incoming links than your own content, you’re apt to be outranked by your own content!

For the most part, scrapers can be ignored; however, some egregious violators and thieves can be pursued through legal means or by filing a DMCA removal request.

Content Syndication

In many cases, content is republished or syndicated into distribution channels hoping to be picked up and republished on other websites. The value of this duplication is in building brand recognition as an author or company. Many people are willing to have their own content devalued a bit in order to increase the branding they receive by syndicating their content.

Typically, syndicated content contains links back to the author site, thereby increasing site authority. However, this only works when the syndicated sites are of sufficient value to actually pass on “link juice.”

The downside is that syndicated content is potentially taking traffic away from your site and driving it to the other publishers. And since some of those sites may have more authority than your own, they are more likely to come up first in the search results. But if your name is on it, more traffic to your content, even on another site, may just be worth it.

Canonical URLs

Search engines make a lot of noise about finding the “canonical” version of duplicate content to ensure the original URL receives higher marks than the duplicate versions. Unfortunately, I have not always seen this play out in the real world in a meaningful way.

Years ago, I asked a group of search engine engineers a question about this. My question was that if there are two pieces of identical content and the search engines clearly know which one came first, do links pointing to the duplicated version count as links to the original version? At the time they could not answer, and I doubt this is or ever will be the case.

Generic Product Descriptions

Some of the most common forms of duplicate content are due to boilerplate product descriptions. Thousands of sites on the web sell products, many of them the same or similar. Take for example any site selling books, CDs, DVDs or Blu-Ray discs. Each site basically has the same product library.

Where do you suppose these sites get the product descriptions from? Most likely the movie studio, publisher, manufacturer or producer of the content. And since they all, ultimately, come from the same place, the descriptive content for these items is usually 100% identical.

Now multiply that across millions of different products and hundreds of thousands of websites selling those products. Unless each site takes the time to craft their own product descriptions, there’s enough duplicate content to go around the solar system several times.

So with all these thousands of sites using the same product information, how does a search engine differentiate between one or another when a search is performed? Well, first and foremost, the search engines want to rank unique content, so if you’re selling the same product, writing your own unique and compelling product description gives you the advantage.

But for many businesses, writing unique product descriptions for hundreds or thousands of products isn’t feasible. Which means you’re at a disadvantage on the duplicate content front, but that can be overcome by adding other unique and helpful content in other places.

You may not be able to write hundreds of unique product descriptions, but you can write unique content for your product categories and helpful tips and tutorials on your blog.

When search engines encounter sites and pages with duplicate content, they have to look at other things on the site in order to determine who wins the top rankings. In these instances, site authority is a significant factor, as is other content that is unique and helpful to your audience.

Unique content alone isn’t enough to outperform sites that have a strong historic and authoritative profile. However, put two semi-equal sites into competition and the one with the most unique content will almost always outperform the other.

Dealing with Onsite Duplicate Content Problems

The most problematic form of duplicate content, and the kind that you are most able to fight, is duplicate content on your own site. It’s one thing to fight a duplicate content battle with other sites that you do not control. It’s quite another to fight against your own internal duplicate content when, theoretically, you have the ability to fix it.

Onsite duplicate content generally stems from bad site architecture or, more precisely, bad website programming! When a site isn’t structured properly, all kinds of duplicate content problems surface, many of which can take some time to uncover and sort out.

Many SEOs don’t focus too much on site architecture because they believe that Google will “figure it out” on its own. The problem with that scenario is it relies on Google figuring things out. Yes, Google can determine that some content is duplicate and treat one of the versions as the “canonical” version. But there is no guarantee that it will.

Just because your spouse is smart isn’t license for you to go around acting like a dumbass. And just because Google may figure out your duplicate content problems and “fix” it with their algorithms is no excuse for not fixing the problems to begin with. Why? Because if Google fails to fix it correctly, you’re screwed.

Here are some common in-site duplicate content issues and how to fix them.

The Problem: Product Categorization Duplication

Many sites use content management systems that allow you to organize products by categories. In doing so, a unique URL is created for each product in each specific category. The problem arises when a single product is found in multiple categories. The CMS, therefore, generates a unique URL for each category that product falls under.

I’ve seen sites like this create up to ten URLs for every single product page. This type of duplication poses a real problem for the engines. A 5,000-product site has 50,000 product URLs, 45,000 of which are duplicates.
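For anyone who wants to plug in their own numbers, here is a minimal Python sketch of that arithmetic. The function name is made up for illustration, and it assumes every product appears under the same number of URLs, which a real catalog rarely does.

```python
def duplicate_url_count(products, urls_per_product):
    """Return (total product URLs, how many of them are duplicates)."""
    total = products * urls_per_product
    # Only one URL per product is needed; every extra URL is a duplicate.
    duplicates = total - products
    return total, duplicates


total, duplicates = duplicate_url_count(5_000, 10)
print(total, duplicates)  # 50000 45000
```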

If there was ever a reason for the search engine spider to abandon your site while indexing pages, this is it. The duplication creates an unnecessary burden on the engines, often leading them to expend their resources somewhere else. This usually leaves you with less authority and presence in the search results.

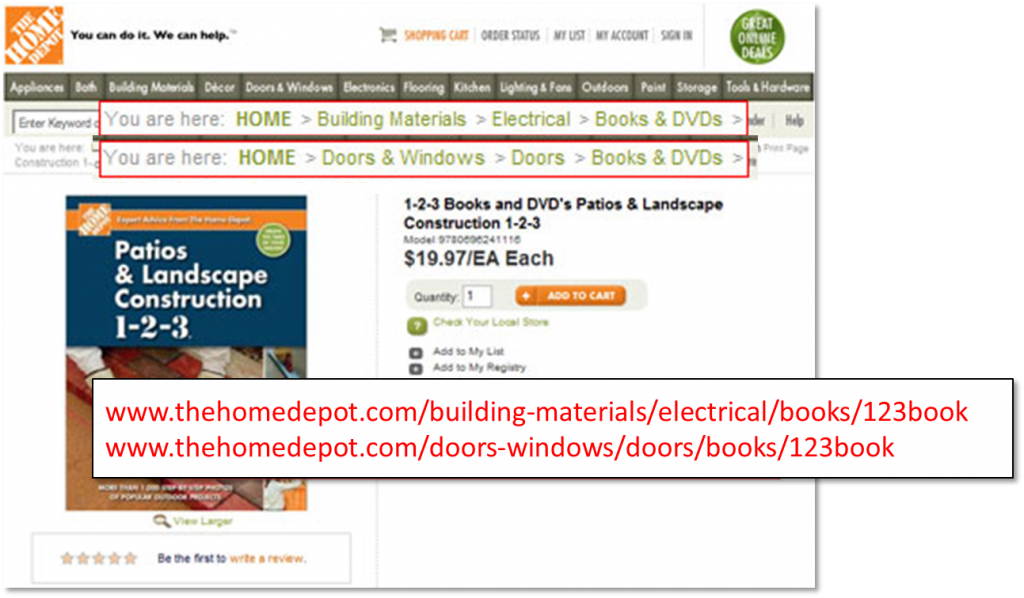

The screenshot above was taken several years ago from The Home Depot’s website. I found a particular product by navigating down two different paths, which you can see by looking at the highlighted breadcrumb trails. Aside from the breadcrumb trail, the two URLs show the exact same content.

This is an example of one product in two categories. But what if the product was tagged in ten different categories? That would create ten duplicate pages!

Now, assume that ten people link to this product. Each page would get an average of one link, whereas if there was only one URL for this product, then the page would receive all ten links pointed to it. Ten links to one page is far better than ten links to ten pages!
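The link-splitting effect can be sketched in a few lines of Python. The URL pattern and the `product_of` helper are hypothetical, and the sketch assumes the ten links land evenly, one per category URL.

```python
from collections import Counter

# Hypothetical inbound links: ten links, each pointing at a different
# category URL for the same product.
links = [f"/category-{n}/product-123" for n in range(1, 11)]


def product_of(url):
    """Take the last path segment as the product identifier (an assumption)."""
    return url.rsplit("/", 1)[-1]


links_per_url = Counter(links)                               # value split ten ways
links_per_product = Counter(product_of(u) for u in links)    # value kept together

print(max(links_per_url.values()))        # 1
print(links_per_product["product-123"])   # 10
```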

The Solution: Master Categorization

An easy fix to this kind of duplication is to simply not allow any product to be found in more than one category. But that’s not exactly good for your shoppers, as it eliminates ways for products to be found in other relevant categories.

So, in keeping with the ability to tag products to multiple categories, there are a couple of options for preventing this kind of duplicate content. One is to manually create the URL path for each product. This can be time consuming and lead to some disorganization in your directory structure, so it’s not recommended.

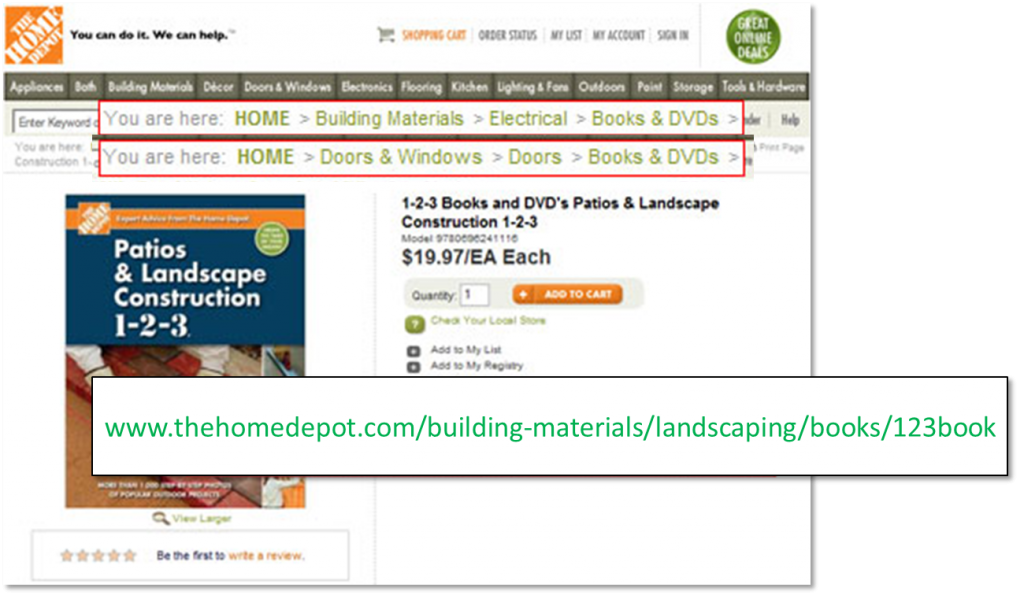

The second, preferred solution is to have a master category assigned to each product. This master category will determine the URL of the product. So, for instance, the products shown above could be assigned to each category so the visitor can have multiple navigational paths to the product, but once they arrive the URL will be the same, regardless of how they found it.
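Here is a rough Python sketch of the master category idea. The `Product` class, its field names and the URL pattern are all assumptions for illustration; your CMS will have its own data model.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    slug: str
    master_category: str                              # decides the one and only URL
    categories: list = field(default_factory=list)    # every category listing the product


def product_url(product):
    """Build the URL from the master category, ignoring how the visitor got here."""
    return f"/{product.master_category}/{product.slug}/"


book = Product(
    slug="123book",
    master_category="books",
    categories=["books", "landscaping", "building-materials"],
)

# Every category page links to the same URL for this product.
links_by_category = {category: product_url(book) for category in book.categories}
print(links_by_category["landscaping"])  # /books/123book/
```

The product still shows up in three categories, but there is only ever one URL for links to point at.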

Many programmers attempt to “fix” this problem by preventing the search engines from indexing all but a single URL of each product. While this does keep duplicate pages out of the search index, it doesn’t address the link splitting issue. So any link juice to a non-indexable URL is essentially lost, rather than helping that product rank better in the search results.

Band-Aid Solution: Canonical Tags

Some content management systems won’t allow for the solution presented above. If that’s the case, you have two other options: find a more search-friendly and robust CMS, or implement a canonical tag.

Canonical tags were developed by the search engines as a means to tell the engine which URL is the “correct” or canonical version. So, in our examples above, you choose which URL you want to be the canonical URL and then add the canonical tag to the code of each and every other duplicate URL product page.

<link rel="canonical" href="http://www.thehomedepot.com/building-materials/landscaping/books/123book" />

In theory, when that tag is applied across all duplicate product URLs, the search engines will attribute any links pointing to the non-canonical URLs to the canonical. It should also keep the other URLs out of the search index, forwarding any internal link value to the canonical URL as well. But that’s only theoretical.

In reality, the search engines use this tag as a “signal” as to your intent and purpose. They will then choose to apply it as they see fit. You may or may not get all link juice passed to the correct page, and you may or may not keep non-canonical pages out of the index. Essentially, they’ll take your canonical tag into consideration, but offer no guarantees.
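Since the tag only helps if it is actually on every duplicate page, it is worth spot-checking. Below is a small Python sketch that pulls the canonical URL out of a page’s HTML using the standard library parser. The class and function names are my own, and the sample page is made up around the tag shown above.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remember the href of the last <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


page = (
    "<html><head>"
    '<link rel="canonical" href="http://www.thehomedepot.com/building-materials/landscaping/books/123book" />'
    "</head><body>Product page</body></html>"
)
print(find_canonical(page))
```

Run it against each duplicate URL of a product and every one of them should report the same canonical address.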

I’m a big proponent of using canonical tags as a backup, but an even bigger proponent of fixing the problem to begin with.

The Problem: Product Summary Duplication

A common form of duplicate content is when short product description summaries are displayed throughout higher-level category pages. Let’s say you are looking for a Burton Snowboard. You click on the Burton link in the main navigation which produces a complete catalog of Burton products and product description snippets, as well as some sub-categories for filtering. You know you only want snowboards, so you select that sub-category – which produces a list of Burton snowboards – and then click on “snowboards.” This leads to a page with various Burton snowboards, each displaying a short product description snippet.

As you continue to navigate through the site, you make your way back to “all snowboards” and find snowboards of all brands along with product snippets – including the same snippets you’ve already seen twice for the Burton snowboards!

Search engines take this kind of duplication into account. However, you are still contending with the unique value issue noted above. While product category pages can provide a great opportunity for attaining broad-level search engine rankings (e.g. searches for “burton snowboards”), unless they offer something of unique value, the chances of earning top rankings are slim.

The Solution: Create Unique Content for All Pages

The goal is to make each product category page stand on its own as having valuable content and solutions for visitors. The simplest way to do that is to write a paragraph or more of unique content for each product category page. Write content that extols the virtues of the products shown on the page, in this case, Burton and Burton Snowboards. Talk specifics that the visitor may not know about the products in general that may help them make a sound purchasing decision.

If you were to remove the products from your product category pages, it should still remain as a page worthy of being indexed by the search engines. If it is, then the duplicate content snippets won’t matter, as the unique content of the page will hold its value despite the duplication.

The Problem: Secure/Non-Secure URL Duplication

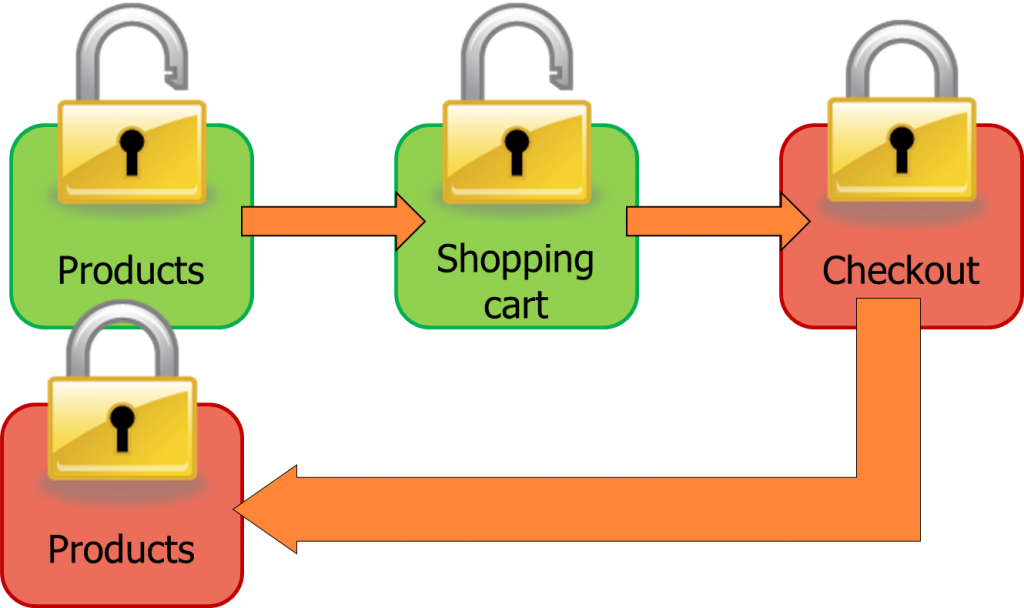

Ecommerce sites that use both secure and non-secure pages are prone to duplicate content issues between those two portions of their website. The result is similar to the multiple URL issue above but with a slight twist. Instead of just the traditional product URL, the search engines are also able to index a secure version of the same URL.

http://www.site.com/category/product1/

https://www.site.com/category/product1/

You can see that the key difference here is the “s” at the end of the “http.” That indicates that the URL is supposed to be secure.

This type of duplication generally happens when visitors move from the non-secure portion of the site to the secure shopping cart, but before they checkout, they move back out and continue shopping. The links out of the secure shopping cart often contain the “https” version of the URL rather than the “http” version, thus giving search engines access to both URLs.

Google has stated that it won’t matter and they will treat the two versions of the URL the same. However, this goes back to band-aid solutions and/or expecting Google to do the right thing.

The Solution: Use Absolute Links

I have always been a firm believer in using absolute links rather than relative links. An absolute link contains the full URL, including the “http://www.site.com,” while a relative link will contain only the information that is required for the browser to find the page (i.e. everything after the “.com”).

Absolute link:

<a href="https://www.polepositionmarketing.com/about-us/pit-crew/stoney-degeyter/"></a>

Relative link:

<a href="/about-us/pit-crew/stoney-degeyter/"></a>

While you don’t always have to use absolute links, if you are linking from any secure page to a non-secure page you should. Once a shopper is in the secure part of the cart, any and all relative links will automatically link to the “https” version of URLs because that part of the URL is assumed based on the URL currently open. Using absolute links to point back to your products forces the visitor to the correct (http) URL.
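You can see this behavior with Python’s standard `urljoin`, which resolves a link against the page it sits on, much as a browser does. The cart URL here is a made-up example; the product URLs are the ones used above.

```python
from urllib.parse import urljoin

cart_page = "https://www.site.com/cart/"   # hypothetical secure cart page

relative_link = "/category/product1/"
absolute_link = "http://www.site.com/category/product1/"

# The relative link inherits "https" from the page the visitor is on.
print(urljoin(cart_page, relative_link))   # https://www.site.com/category/product1/

# The absolute link stays exactly as written.
print(urljoin(cart_page, absolute_link))   # http://www.site.com/category/product1/
```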

At this point you may be wondering why anyone would use relative links at all. Developers like to use relative links so they can easily move a site from a testing server to the live server without having to worry about the site links. Relative links will automatically accommodate the domain change (test.site.com/ to site.com/) without having to change the code because, as I said above, the site is assumed based on the currently open URL.

Also, when dealing with shopping cart pages, it is preferable to prevent search engines from getting into the shopping cart area at all. These URLs should be blocked 100%.
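Blocking is usually done with a robots.txt rule. The Python sketch below uses the standard library’s robots.txt parser to check that a rule does what you expect. The `/cart/` path and the rule itself are assumptions; your cart may live somewhere else.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps all crawlers out of the cart.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
"""


def is_crawlable(url, robots_txt=ROBOTS_TXT):
    """Return True if a generic crawler is allowed to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("*", url)


print(is_crawlable("https://www.site.com/cart/checkout"))     # False
print(is_crawlable("http://www.site.com/category/product1/"))  # True
```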

But even that is not enough to solve the duplicate content issue. If a visitor moves from the blocked pages to a duplicate (secure) unblocked product page, that page can get picked up by Google’s index. Using absolute links will ensure that no inadvertent URLs are picked up by the search engines.

The Problem: Session ID Duplication

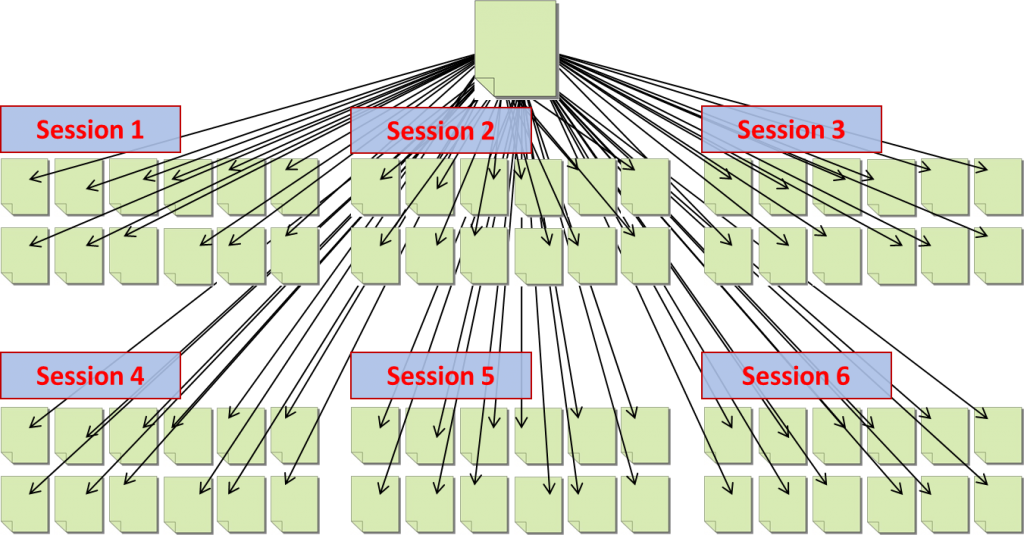

Session IDs create some of the worst duplicate content violations imaginable. While they have largely been replaced by newer and better technologies, session IDs are still sometimes used as a way to track visitors through a site. Each visitor is given a unique ID number which is appended to the URLs as they move through the site.

Actual URL:

www.site.com/product

Visitor 1:

www.site.com/product?id=1234567890

Visitor 2:

www.site.com/product?id=1234567891

Visitor 3:

www.site.com/product?id=1234567892

That session number follows them through the site and is attached to every URL they visit. And this is where the problem becomes apparent.

Get out your calculators, because we’re gonna do some serious math. Assume you have a 50-page site. Each visitor gets a session ID attached, so you have 50 unique URLs per visitor. Assuming you have 50 visitors per day, your 50-page site picks up 2,500 unique, indexable URLs every day. Multiply that by 365 days in a year and you’re looking at almost one million unique URLs, all for an itty-bitty 50-page site!
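If you left your calculator at home, here is the same math as a short Python sketch. It assumes the worst case, where every visitor views every page, and the variable names are only for illustration.

```python
pages = 50
visitors_per_day = 50
days_per_year = 365

# Worst case: every visitor views every page, each under a unique session URL.
urls_per_day = pages * visitors_per_day
urls_per_year = urls_per_day * days_per_year

print(urls_per_day)    # 2500
print(urls_per_year)   # 912500
```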

If you were a search engine, would you want to index that?

The Solution: Don’t Use Session IDs

I’m not a programmer, so my knowledge in this area is relatively limited. Here’s what I know: Session IDs suck. There are better ways to do what session IDs accomplish without the duplicate content clinging like gum on the bottom of your shoe! Not only can other options, such as cookies, allow you to track visitors through the site, they do it far better and can keep track beyond just one single session!

I’ll leave it to you and your programmer to figure out which tracking option is best for your system, but you can tell them I said session IDs are the wrong answer.
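To give your programmer a starting point, here is a minimal Python sketch of the cookie approach using the standard library. The cookie name `session_id` and the helper function are assumptions, and a real site would lean on its framework’s session handling rather than hand-building headers.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id):
    """Build a Set-Cookie header that carries the visitor's ID instead of the URL."""
    cookie = SimpleCookie()
    cookie["session_id"] = session_id
    cookie["session_id"]["path"] = "/"        # send it with every page on the site
    cookie["session_id"]["httponly"] = True   # keep page scripts from reading it
    return cookie.output()


page_url = "www.site.com/product"             # the same URL for every visitor
header = session_cookie_header("1234567890")
print(header)
```

The visitor is still tracked, but the ID travels in a header, so the URL that search engines see never changes.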

The Problem: Redundant URL Duplication

One of the most basic site architectural problems revolves around how pages are accessed in the browser. Most pages can only be accessed by their primary URL, but in cases where the page is the first page of a sub-directory there are automatically other variations of the URL that can also access the same content.

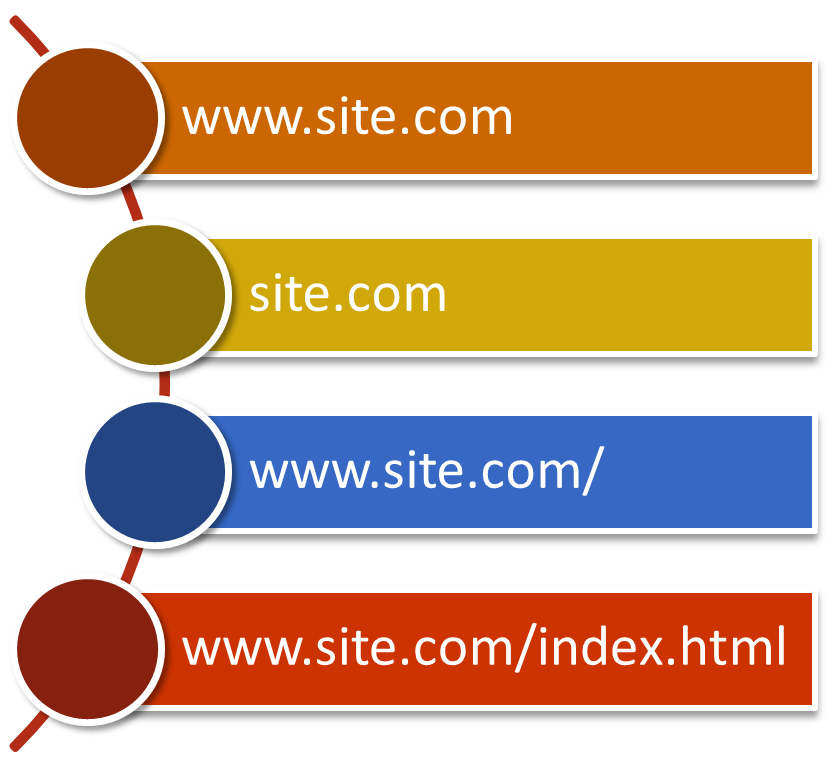

This is illustrated in the image above. Each of these URLs, left unchecked, leads to the exact same page with the exact same content.

This holds true of any page at the top of a directory structure (e.g. www.site.com/page, www.site.com/page2, etc.). That’s one page with four distinct URLs creating duplicate content on the site and splitting your link juice.

The Solution: Server Side Redirects and Internal Link Consistency

There are a number of fixes to this kind of duplicate content, and I recommend implementing all of them. Each has its own merits but is prone to allowing things to slip through or around. Implementing them all creates an iron-clad duplicate content solution that can’t be breached!

Server Side Redirects

One solution that can be implemented on Apache servers is to redirect your non www. URLs to www. URLs (or vice versa) using your .htaccess file. No need to explain it in detail here, but you can follow that link to get the full scoop. This doesn’t work on all servers, but you can work with your web host and programmers to find a similar solution for the same effect.

This solution works whether you want the www in the URL or not. Just decide which way you want to go and redirect the other to that.
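To show the logic behind that redirect, here is a Python sketch. It is not the .htaccess rule itself, only the decision it makes, and it assumes you picked the www version. The constant and function names are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.site.com"    # assumption: the www version was chosen
ALTERNATE_HOSTS = {"site.com"}     # hosts that should redirect to it


def redirect_target(url):
    """Return the URL to 301-redirect to, or None if the host is already correct."""
    parts = urlsplit(url)
    if parts.netloc.lower() in ALTERNATE_HOSTS:
        return urlunsplit(parts._replace(netloc=PREFERRED_HOST))
    return None


print(redirect_target("http://site.com/page"))      # http://www.site.com/page
print(redirect_target("http://www.site.com/page"))  # None
```

If you prefer the non-www version, swap the two values and the same logic applies.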

Internal Link Consistency

Once you decide whether your URLs will or won’t use the www, be sure to use that in all your absolute internal linking. Yes, if you link incorrectly, the server side redirect will handle it. But if for whatever reason the redirect fails, you are now opening yourself up to duplicate pages getting indexed. I’ve seen it happen where someone makes a change to the server and the redirects no longer work. It is often months later before the problem is detected, and then only after duplicate pages have made their way into the search index.

Never link to /index.html (or .php, etc.)

When linking to a page at the top of any directory or sub-directory, don’t link to the page file name, but instead, link to the root directory folder. These links are automatically redirected for the home page using the server side redirect, but it isn’t automatically done for internal site sub-directory pages. Making sure all your links are consistently pointing to the root sub-directory for these top level pages means you won’t have to worry about a duplicate page showing up in the search results.

Link to:

www.site.com/

www.site.com/subdirectory/

not

www.site.com/index.html (or .asp, .php, etc.)

www.site.com/subfolder/index.html
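If your links are generated by code, a small clean-up step can enforce this rule. The Python sketch below trims a trailing index file from a link. The list of extensions is an assumption, and it does not handle links that carry query strings.

```python
import re

# Matches a trailing index file such as /index.html, /index.htm, /index.php or /index.asp.
INDEX_FILE = re.compile(r"/index\.(?:html?|php|asp)$", re.IGNORECASE)


def directory_link(url):
    """Point the link at the directory rather than its index file."""
    return INDEX_FILE.sub("/", url)


print(directory_link("www.site.com/index.html"))            # www.site.com/
print(directory_link("www.site.com/subfolder/index.html"))  # www.site.com/subfolder/
print(directory_link("www.site.com/subdirectory/"))         # www.site.com/subdirectory/
```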

Implementing ALL of these fixes may seem like duplicate content fix overkill, but most of them are so easy there is no reason not to. It takes a bit of time, but the certainty of eliminating all duplicate content problems is well worth it.

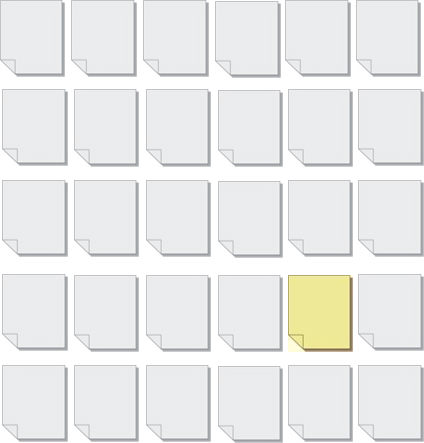

The Problem: Historical File Duplication

This isn’t something that most people think about as a duplicate content problem, but it certainly can be. Over the years, a typical site goes through designs, re-designs, development and re-development. As things get shuffled, copied, moved around, and beta tested, there is a tendency for duplicate content to be inadvertently created and left on the server. I’ve seen developers change the entire directory structure of a site and upload it without ever removing or redirecting the old original files.

What exacerbates this problem is when internal content links are not updated to point to the new URLs. Going back to the developers I mentioned in the paragraph above, once they rolled out the “finished” new website, I had to spend several hours fixing links in content that were pointing to the old files!

As long as these old files remain on the server, and worse, are being linked to, the search engines continue to index these old pages.

The Solution: Delete Files and Fix Broken Links

If you haven’t bothered to remove the old files from your server, a broken link check won’t do you any good. So start by making sure all old pages are deleted. Be sure to back up your site first, in case you accidentally delete something you shouldn’t. Once all old pages are removed, start running broken link checks with a program such as Xenu Link Sleuth.

The report provided should let you know which page contains broken links, and where the link points to. Use that to determine the correct new location of the link and fix it. Once you have them all fixed, rerun the broken link check. Chances are it will continue to find more links to fix. I’ve had to run these checks up to 20 times before I was confident all broken links had been fixed. Even then, it’s a good idea to run them periodically to check for anything new.
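A dedicated tool is the practical choice here, but the idea behind a broken link check is simple enough to sketch in Python. This version assumes you have each page’s HTML in a dictionary keyed by its path, and it only checks internal links that start with a slash. The names and sample pages are made up.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def broken_links(pages):
    """Return (page, href) pairs where an internal link points at a missing page."""
    broken = []
    for path, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            if href.startswith("/") and href not in pages:
                broken.append((path, href))
    return broken


site = {
    "/": '<a href="/about-us/">About</a> <a href="/old-about.html">Old about</a>',
    "/about-us/": '<a href="/">Home</a>',
}
print(broken_links(site))  # [('/', '/old-about.html')]
```

Each pair tells you which page to open and which link on it needs to be repointed.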

I should note that most content management systems render this problem obsolete. CMSs manage the URLs and don’t leave content behind on old URLs when things change. However, if you have any content outside of the CMS (such as PDF documents) this can still be an issue.

Not all content duplication will destroy your on-site SEO efforts; however, some forms of it will definitely impact your performance in the search results. The best duplicate content is found on your competitors’ websites, not your own. And while search engines can “figure out” your intent regarding duplicate content, that is no substitute for fixing the problem.

Replacing duplicate content with unique, purposeful content that has value to the searchers and engines will always put you in an advantageous position against a competitor whose site is not free of duplicate content. You still might have other work to do to compete for rankings, but this is one problem that won’t stand in your way.